Overview

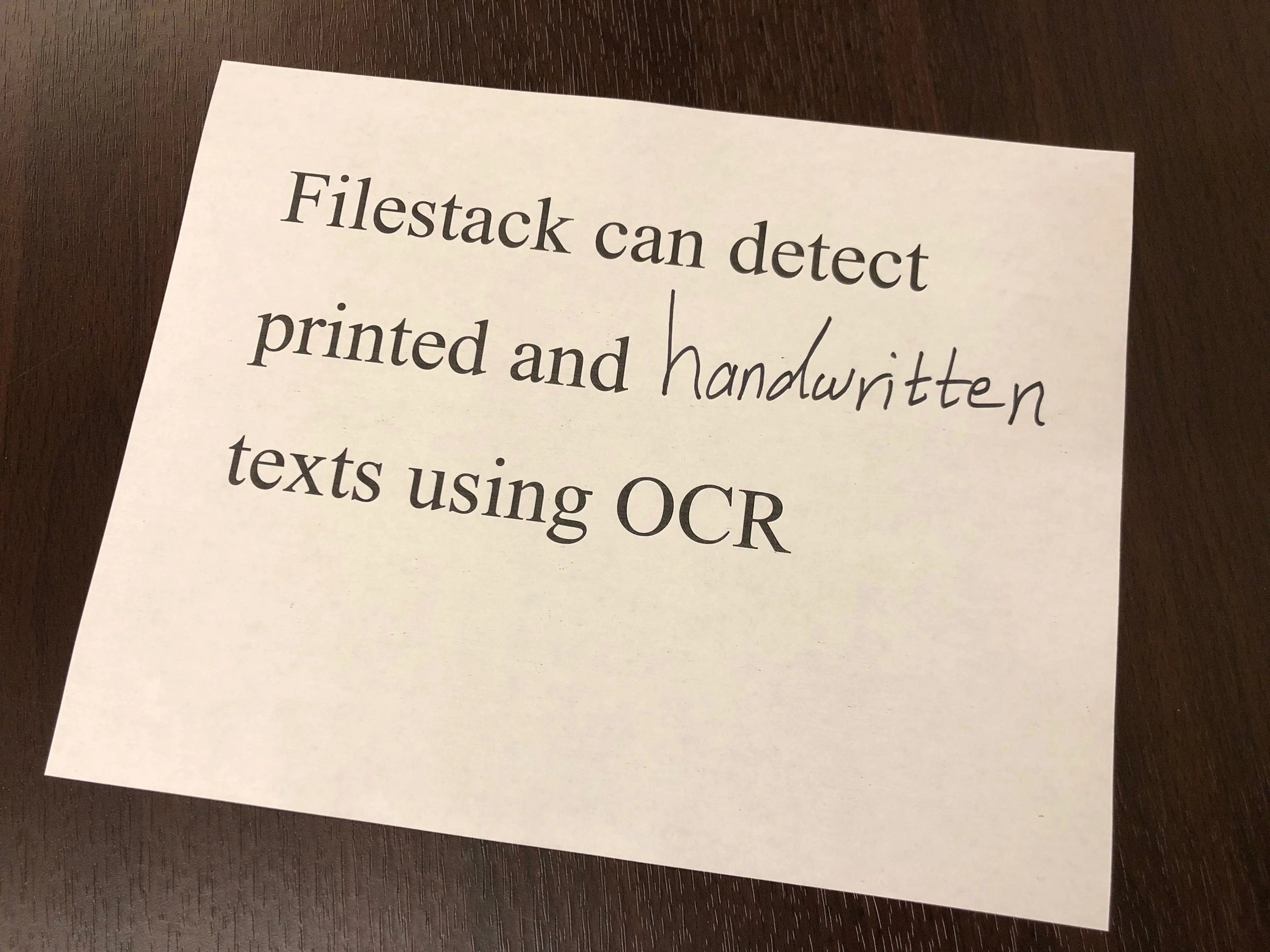

Optical Character Recognition (OCR) is one of the intelligence services of the Filestack platform. It detects both printed and handwritten text in images. The result is returned as JSON that describes every detected text area, line, and word.

Processing API

OCR is available as a synchronous operation in the Processing API using the following task:

ocr

Response

{
  "document": {
    "text_areas": [
      {
        "bounding_box": [
          { "x": 834, "y": 478 },
          { "x": 3372, "y": 739 },
          { "x": 3251, "y": 1907 },
          { "x": 714, "y": 1646 }
        ],
        "lines": [
          {
            "bounding_box": [
              { "x": 957, "y": 490 },
              { "x": 3008, "y": 701 },
              { "x": 2977, "y": 1009 },
              { "x": 925, "y": 797 }
            ],
            "text": "Filestack can detect",
            "words": [
              {
                "bounding_box": [
                  { "x": 957, "y": 490 },
                  { "x": 1833, "y": 580 },
                  { "x": 1802, "y": 888 },
                  { "x": 925, "y": 797 }
                ],
                "text": "Filestack"
              },
              {
                "bounding_box": [
                  { "x": 1916, "y": 589 },
                  { "x": 2266, "y": 625 },
                  { "x": 2235, "y": 932 },
                  { "x": 1884, "y": 896 }
                ],
                "text": "can"
              },
              {
                "bounding_box": [
                  { "x": 2336, "y": 632 },
                  { "x": 3008, "y": 701 },
                  { "x": 2977, "y": 1009 },
                  { "x": 2304, "y": 939 }
                ],
                "text": "detect"
              }
            ]
          },
          {
            "bounding_box": [
              { "x": 860, "y": 858 },
              { "x": 3330, "y": 1049 },
              { "x": 3301, "y": 1421 },
              { "x": 831, "y": 1229 }
            ],
            "text": "printed and handwritten",
            "words": [
              {
                "bounding_box": [
                  { "x": 860, "y": 858 },
                  { "x": 1550, "y": 912 },
                  { "x": 1521, "y": 1283 },
                  { "x": 831, "y": 1229 }
                ],
                "text": "printed"
              },
              {
                "bounding_box": [
                  { "x": 1677, "y": 922 },
                  { "x": 2047, "y": 951 },
                  { "x": 2018, "y": 1321 },
                  { "x": 1648, "y": 1292 }
                ],
                "text": "and"
              },
              {
                "bounding_box": [
                  { "x": 2107, "y": 954 },
                  { "x": 3330, "y": 1049 },
                  { "x": 3301, "y": 1421 },
                  { "x": 2078, "y": 1326 }
                ],
                "text": "handwritten"
              }
            ]
          },
          {
            "bounding_box": [
              { "x": 749, "y": 1305 },
              { "x": 2504, "y": 1486 },
              { "x": 2469, "y": 1826 },
              { "x": 714, "y": 1645 }
            ],
            "text": "texts using OCR",
            "words": [
              {
                "bounding_box": [
                  { "x": 749, "y": 1305 },
                  { "x": 1233, "y": 1355 },
                  { "x": 1198, "y": 1695 },
                  { "x": 714, "y": 1645 }
                ],
                "text": "texts"
              },
              {
                "bounding_box": [
                  { "x": 1317, "y": 1364 },
                  { "x": 1910, "y": 1425 },
                  { "x": 1875, "y": 1765 },
                  { "x": 1282, "y": 1704 }
                ],
                "text": "using"
              },
              {
                "bounding_box": [
                  { "x": 1972, "y": 1431 },
                  { "x": 2504, "y": 1486 },
                  { "x": 2469, "y": 1826 },
                  { "x": 1937, "y": 1771 }
                ],
                "text": "OCR"
              }
            ]
          }
        ],
        "text": "Filestack can detect\nprinted and handwritten\ntexts using OCR"
      }
    ]
  },
  "text": "Filestack can detect\nprinted and handwritten\ntexts using OCR\n",
  "text_area_percentage": 23.40692449819434
}

Response Parameters

| Parameter | Description |
| --- | --- |
| document | Contains all information about the OCR response. |
| text_areas | List of detected text blocks, each with its bounding box, lines, and text. |
| lines | List of detected text lines with their corresponding bounding boxes. |
| bounding_box | List of corner coordinates for a detected text block, text line, or word. |
| text | Extracted text of a block, line, or word. |
| text_area_percentage | Percentage of the image area that is covered by text. |
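The nesting described above (text areas contain lines, lines contain words) can be walked with a few loops. The following is a minimal sketch, not an official client: the function name `iter_words` and the `sample` dictionary are illustrative assumptions, and the sample is a hand-trimmed fragment of the response shown earlier.

```python
from typing import Dict, Iterator, List, Tuple

# A bounding box is a list of four {"x": ..., "y": ...} corner points.
BoundingBox = List[Dict[str, int]]


def iter_words(ocr_response: dict) -> Iterator[Tuple[str, BoundingBox]]:
    """Yield (text, bounding_box) for every word in an OCR response."""
    document = ocr_response.get("document", {})
    for area in document.get("text_areas", []):
        for line in area.get("lines", []):
            for word in line.get("words", []):
                yield word["text"], word["bounding_box"]


# Hand-trimmed fragment of the sample response (two words of the first line).
sample = {
    "document": {
        "text_areas": [
            {
                "lines": [
                    {
                        "words": [
                            {
                                "bounding_box": [
                                    {"x": 957, "y": 490},
                                    {"x": 1833, "y": 580},
                                    {"x": 1802, "y": 888},
                                    {"x": 925, "y": 797},
                                ],
                                "text": "Filestack",
                            },
                            {
                                "bounding_box": [
                                    {"x": 1916, "y": 589},
                                    {"x": 2266, "y": 625},
                                    {"x": 2235, "y": 932},
                                    {"x": 1884, "y": 896},
                                ],
                                "text": "can",
                            },
                        ]
                    }
                ]
            }
        ]
    }
}

words = [text for text, _ in iter_words(sample)]
```

Using `.get()` with empty defaults keeps the walk tolerant of responses in which no text was detected, assuming such responses simply omit or empty the lists.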

Examples

Get the OCR response on your image:

https://cdn.filestackcontent.com/security=p:<POLICY>,s:<SIGNATURE>/ocr/<HANDLE>

Use ocr in a chain with other tasks such as doc_detection:

https://cdn.filestackcontent.com/security=p:<POLICY>,s:<SIGNATURE>/doc_detection=coords:false,preprocess:true/ocr/<HANDLE>

Use ocr with an external URL:

https://cdn.filestackcontent.com/<FILESTACK_API_KEY>/security=p:<POLICY>,s:<SIGNATURE>/ocr/<EXTERNAL_URL>

Use ocr with Storage Aliases:

https://cdn.filestackcontent.com/<FILESTACK_API_KEY>/security=p:<POLICY>,s:<SIGNATURE>/ocr/src://<STORAGE_ALIAS>/<PATH_TO_FILE>
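The URL patterns above share one shape: an optional API key, the security segment, optional preceding tasks, the ocr task, and the source. The sketch below assembles such URLs and fetches the JSON result with the Python standard library. The helper names `build_ocr_url` and `fetch_ocr` are hypothetical, the policy and signature are assumed to have been generated already, and whether an external URL needs extra escaping is not covered here.

```python
import json
import urllib.request
from typing import Optional, Sequence

CDN_BASE = "https://cdn.filestackcontent.com"


def build_ocr_url(source: str, policy: str, signature: str,
                  api_key: Optional[str] = None,
                  pre_tasks: Sequence[str] = ()) -> str:
    """Assemble a Processing API URL that ends with the ocr task.

    source    -- a file handle, an external URL, or a src:// storage alias path
    api_key   -- included only for external URLs and storage aliases
    pre_tasks -- tasks that run before ocr, e.g. a doc_detection segment
    """
    parts = [CDN_BASE]
    if api_key:
        parts.append(api_key)
    parts.append(f"security=p:{policy},s:{signature}")
    parts.extend(pre_tasks)
    parts.extend(["ocr", source])
    return "/".join(parts)


def fetch_ocr(url: str) -> dict:
    """Request the URL and decode the JSON body (performs a network call)."""
    with urllib.request.urlopen(url) as response:
        return json.load(response)


# Placeholders are kept as-is; substitute real values before calling fetch_ocr.
chained_url = build_ocr_url(
    "<HANDLE>", "<POLICY>", "<SIGNATURE>",
    pre_tasks=["doc_detection=coords:false,preprocess:true"],
)
```

Keeping URL construction separate from the network call makes the builder easy to check without credentials.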

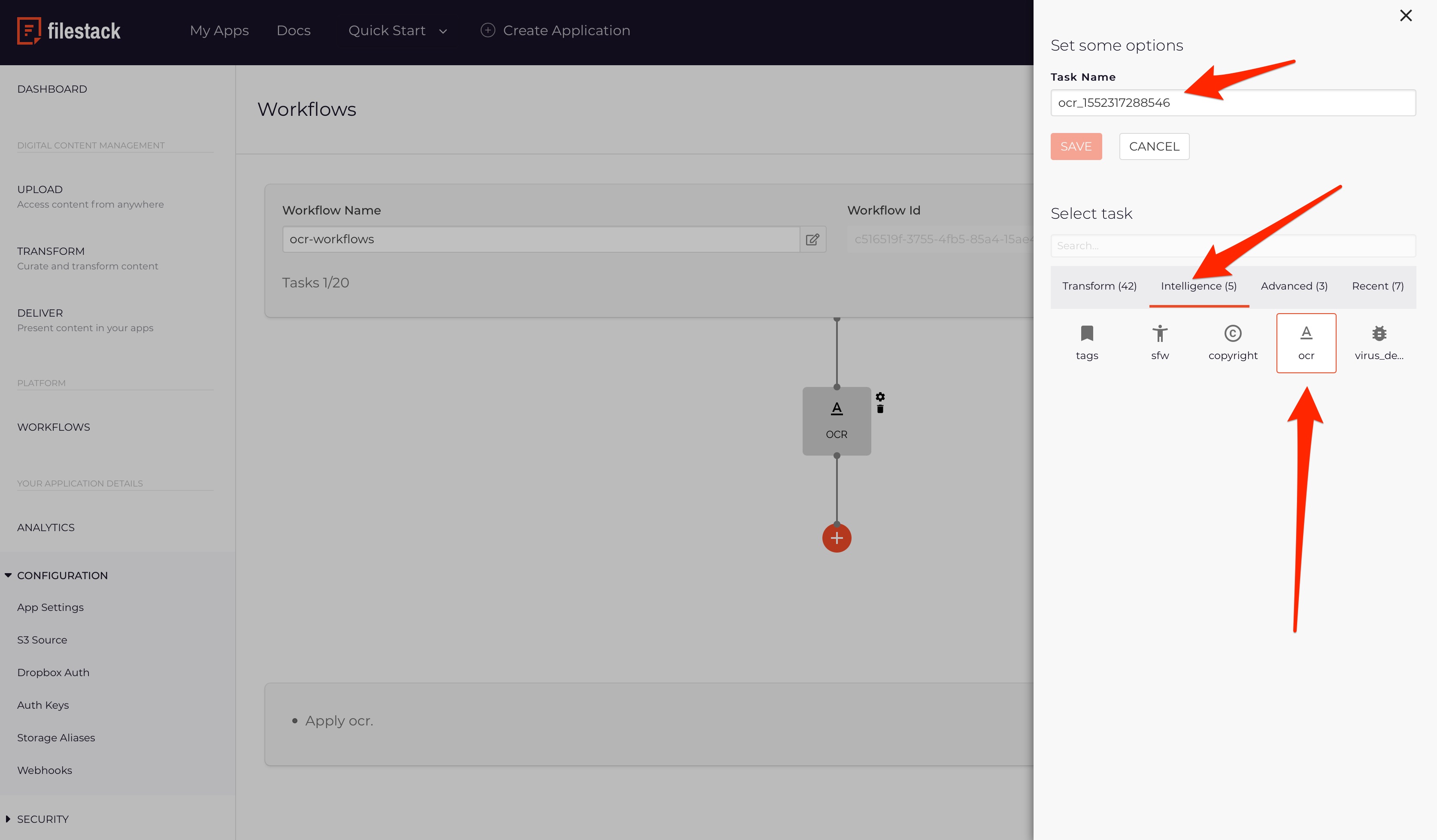

Workflows Task Configuration

If you have not used the Workflows UI before, follow the Creating Workflows Tutorial first to learn how to configure tasks and the logic between them.

The OCR task is available under the Intelligence tasks category.

Workflows Parameters

| Parameter | Description |
| --- | --- |
| Task Name | Unique name of the task. It will be included in the webhook response. |

Logic

The OCR task returns the following response to the workflow:

{
  "data": {
    "document": {
      "text_areas": [
        {
          "bounding_box": [
            {
              "x": "horizontal coordinate of top left corner",
              "y": "vertical coordinate of top left corner"
            },
            {
              "x": "horizontal coordinate of top right corner",
              "y": "vertical coordinate of top right corner"
            },
            {
              "x": "horizontal coordinate of bottom right corner",
              "y": "vertical coordinate of bottom right corner"
            },
            {
              "x": "horizontal coordinate of bottom left corner",
              "y": "vertical coordinate of bottom left corner"
            }
          ],
          "lines": [
            {
              "bounding_box": [
                "detected bounding box of the line"
              ],
              "text": "detected text of the line",
              "words": [
                {
                  "bounding_box": [
                    "detected bounding box of the word"
                  ],
                  "text": "detected word"
                }
              ]
            }
          ],
          "text": "detected text in the text area"
        }
      ]
    },
    "text": "all text extracted from the document",
    "text_area_percentage": 23.40692449819434
  }
}

Logic Parameters

| Parameter | Description |
| --- | --- |
| data | Top-level object of the response. |
| document | Contains all information about the OCR response. |
| text_areas | List of detected text blocks, each with its bounding box, lines, and text. |
| lines | List of detected text lines with their corresponding bounding boxes. |
| bounding_box | List of corner coordinates for a detected text block, text line, or word. |
| text | Extracted text of a block, line, or word. |
| text_area_percentage | Percentage of the image area that is covered by text. |
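Because each bounding_box lists four corners (top left, top right, bottom right, bottom left), a box around rotated text is not axis-aligned. When a plain rectangle is needed, for instance to crop the region, one option is to take the minimum and maximum of the corner coordinates. This is a sketch under that assumption; `to_axis_aligned` is a hypothetical helper, not part of any Filestack SDK.

```python
from typing import Dict, List, Tuple


def to_axis_aligned(bounding_box: List[Dict[str, int]]) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the smallest upright rectangle
    that encloses all corner points of a bounding box."""
    xs = [point["x"] for point in bounding_box]
    ys = [point["y"] for point in bounding_box]
    return min(xs), min(ys), max(xs), max(ys)


# Corner points of the word "Filestack" from the sample response.
filestack_box = [
    {"x": 957, "y": 490},
    {"x": 1833, "y": 580},
    {"x": 1802, "y": 888},
    {"x": 925, "y": 797},
]

rect = to_axis_aligned(filestack_box)  # (925, 490, 1833, 888)
```

The enclosing rectangle is slightly larger than the detected quadrilateral, which is usually acceptable for cropping or highlighting.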

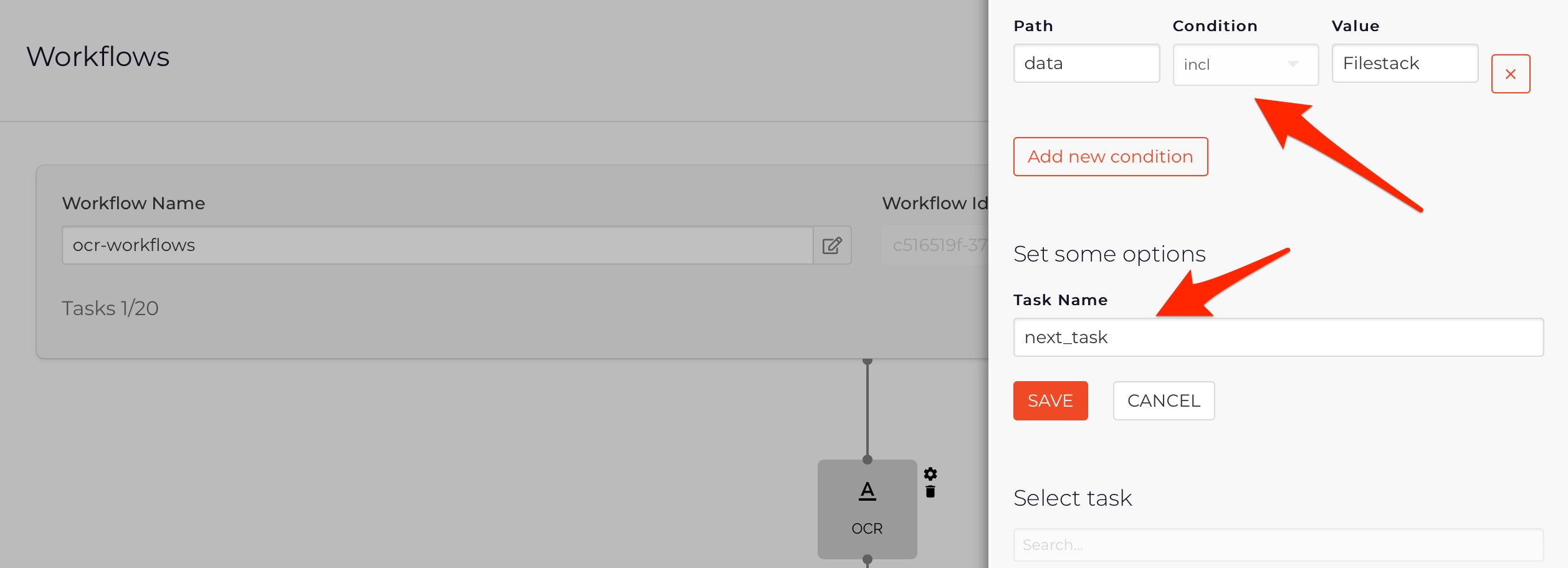

Based on the task’s response, you can build logic that tells the workflow how dependent tasks should be executed. For example, to run another task only when OCR detects a specific word, e.g. “Filestack”, you can use the following rule:

data incl "Filestack"

In the Workflows UI, this rule looks like the example below:

Visit the Creating Workflows Tutorial to learn how to use the Workflows UI to configure your tasks and the logic between them.
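For code that consumes the task result outside of Workflows, a comparable check can be done on the client side. The sketch below does not reproduce the Workflows rule engine: it assumes that testing for a word amounts to a case-sensitive substring search over the extracted `text` field, and the function name `ocr_mentions` is hypothetical.

```python
def ocr_mentions(task_result: dict, needle: str) -> bool:
    """Return True if the extracted text of an OCR task result contains needle.

    Assumption: a case-sensitive substring search over data.text; the actual
    semantics of the Workflows rule are defined by the Workflows engine.
    """
    text = task_result.get("data", {}).get("text", "")
    return needle in text


# Trimmed task result using the text from the sample response.
result = {
    "data": {
        "text": "Filestack can detect\nprinted and handwritten\ntexts using OCR\n"
    }
}

has_filestack = ocr_mentions(result, "Filestack")
```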

Webhook

Below you can find an example webhook payload for an OCR task on a sample image:

OCR detailed response:

{ "id": 67410035, "action": "fs.workflow", "timestamp": 1552323363, "text": { "workflow": "c516519f-3755-4fb5-85a4-15ae4b31d475", "createdAt": "2019-03-11T16:55:03.628618436Z", "updatedAt": "2019-03-11T16:55:06.355204948Z", "sources": [ "T1pEX4QKSPW99Oi73OlW" ], "results": { "ocr_1552317288546": { "data": { "document": { "text_areas": [ { "bounding_box": [ { "x": 834, "y": 478 }, { "x": 3372, "y": 739 }, { "x": 3251, "y": 1907 }, { "x": 714, "y": 1646 } ], "lines": [ { "bounding_box": [ { "x": 957, "y": 490 }, { "x": 3008, "y": 701 }, { "x": 2977, "y": 1009 }, { "x": 925, "y": 797 } ], "text": "Filestack can detect", "words": [ { "bounding_box": [ { "x": 957, "y": 490 }, { "x": 1833, "y": 580 }, { "x": 1802, "y": 888 }, { "x": 925, "y": 797 } ], "text": "Filestack" }, { "bounding_box": [ { "x": 1916, "y": 589 }, { "x": 2266, "y": 625 }, { "x": 2235, "y": 932 }, { "x": 1884, "y": 896 } ], "text": "can" }, { "bounding_box": [ { "x": 2336, "y": 632 }, { "x": 3008, "y": 701 }, { "x": 2977, "y": 1009 }, { "x": 2304, "y": 939 } ], "text": "detect" } ] }, { "bounding_box": [ { "x": 860, "y": 858 }, { "x": 3330, "y": 1049 }, { "x": 3301, "y": 1421 }, { "x": 831, "y": 1229 } ], "text": "printed and handwritten", "words": [ { "bounding_box": [ { "x": 860, "y": 858 }, { "x": 1550, "y": 912 }, { "x": 1521, "y": 1283 }, { "x": 831, "y": 1229 } ], "text": "printed" }, { "bounding_box": [ { "x": 1677, "y": 922 }, { "x": 2047, "y": 951 }, { "x": 2018, "y": 1321 }, { "x": 1648, "y": 1292 } ], "text": "and" }, { "bounding_box": [ { "x": 2107, "y": 954 }, { "x": 3330, "y": 1049 }, { "x": 3301, "y": 1421 }, { "x": 2078, "y": 1326 } ], "text": "handwritten" } ] }, { "bounding_box": [ { "x": 749, "y": 1305 }, { "x": 2504, "y": 1486 }, { "x": 2469, "y": 1826 }, { "x": 714, "y": 1645 } ], "text": "texts using OCR", "words": [ { "bounding_box": [ { "x": 749, "y": 1305 }, { "x": 1233, "y": 1355 }, { "x": 1198, "y": 1695 }, { "x": 714, "y": 1645 } ], "text": "texts" }, { "bounding_box": [ { 
"x": 1317, "y": 1364 }, { "x": 1910, "y": 1425 }, { "x": 1875, "y": 1765 }, { "x": 1282, "y": 1704 } ], "text": "using" }, { "bounding_box": [ { "x": 1972, "y": 1431 }, { "x": 2504, "y": 1486 }, { "x": 2469, "y": 1826 }, { "x": 1937, "y": 1771 } ], "text": "OCR" } ] } ], "text": "Filestack can detect\nprinted and handwritten\ntexts using OCR" } ] }, "text": "Filestack can detect\nprinted and handwritten\ntexts using OCR\n", "text_area_percentage": 23.40692449819434 } } }, "status": "Finished" } }

Please visit the webhooks documentation to learn more.
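In the payload above, each task result sits under `text.results`, keyed by the task name, with the OCR output in its `data` object. The following sketch pulls the extracted text out of such a payload. The function name `extract_ocr_text` is hypothetical, and identifying OCR results by the presence of a `document` key is an assumption based on the sample payload only.

```python
from typing import Dict


def extract_ocr_text(payload: dict) -> Dict[str, str]:
    """Map each task name to its extracted text for results that look like OCR output."""
    results = payload.get("text", {}).get("results", {})
    texts = {}
    for task_name, result in results.items():
        data = result.get("data", {})
        # Assumption: OCR results are recognized by their "document" key.
        if "document" in data:
            texts[task_name] = data.get("text", "")
    return texts


# Trimmed version of the sample webhook payload (text areas omitted).
payload = {
    "id": 67410035,
    "action": "fs.workflow",
    "timestamp": 1552323363,
    "text": {
        "workflow": "c516519f-3755-4fb5-85a4-15ae4b31d475",
        "sources": ["T1pEX4QKSPW99Oi73OlW"],
        "results": {
            "ocr_1552317288546": {
                "data": {
                    "document": {"text_areas": []},
                    "text": "Filestack can detect\nprinted and handwritten\ntexts using OCR\n",
                    "text_area_percentage": 23.40692449819434,
                }
            }
        },
        "status": "Finished",
    },
}

ocr_texts = extract_ocr_text(payload)
```

A production handler would typically also check the `status` field before reading results; that step is left out here to keep the sketch short.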